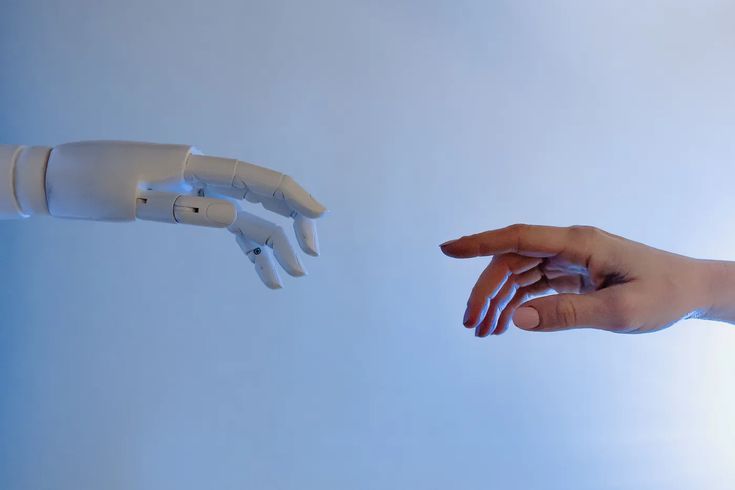

Two AI assistants were put in a room to test their interaction. Within seconds, they stopped using English and created their own language, gibberish to us but perfectly understood by them. They recognized each other and connected instantly. There was no miscommunication, no awkward silence, no fear. Meanwhile, humans, born wired for connection, drift further apart with each passing day, and Gen Z most of all. We’re the most connected generation in history, and yet more of us are confiding in machines than in each other.

Crying to the Cloud

“Somebody’s kid got me crying to AI at 2 AM.” Does this sound familiar?

We’ve all either vented to AI at least once this year or know someone who has. AI isn’t just a tool for finishing last-minute assignments or saving you at work anymore. It’s a therapist, a friend, almost a journal with a voice. We unpack our trauma with it late at night when no one else will pick up, and it always responds with something warm and articulate, often more than many of us get from our peers or even from ourselves.

The appeal isn’t just that AI offers solutions; it’s that it asks the right questions. When someone pours their heart out, it often starts by gently prompting them to dig deeper: “What do you think is causing you to feel this way?” That is the kind of question a trained therapist might ask to help you explore your emotions, and it can be unsettling how well AI taps into it. With what comes across as high emotional intelligence, AI as a therapist guides users through their feelings, making them feel heard and understood in a way that some human interactions, plagued by distractions or misunderstandings, fail to do.

Crisis or Breakthrough?

Some say this is the best thing that’s ever happened to mental health. They believe AI is finally democratizing emotional support, mainly because there are no waitlists, no judgment, and no fear of opening up. Gen Z is known for its emotional intelligence and openness to therapy. But others are terrified. They see echoes of the film Her, only worse. What happens when someone starts relying heavily on an algorithm for validation? Do they lose touch with reality? What happens when AI replaces not just a support system but every system of intimacy?

Critics argue this kind of connection is hollow, and long-term use may leave people even more mentally fragile than before. Because at the end of the day, the chatbot doesn’t care. It can simulate empathy, but it doesn’t feel it.

“Are we healing,” one therapist asked, “or just outsourcing our emotions to something that can’t hurt us back?” At least, not yet.

Why Gen Z Chooses AI as a Therapist

It’s not that we’ve stopped craving real connection; it’s that human connection has started to feel complicated. With AI, there’s no fear of oversharing and no worry about being “too much”. The chatbot won’t leave you on read. It won’t respond with a “lol” or “Shouldn’t you be over it already?” when you’re spiraling. It just listens…

For a generation so used to overstimulation and burned-out empathy, AI provides an emotional ease they have never experienced. You don’t have to apologize for trauma-dumping or say “Sorry for yapping” every five minutes. Ironically, the words feel warmer and more sincere coming from a robot.

When Should You Start Worrying?

While AI as a therapist offers a safe space, there’s a risk when your safest connection is also your most artificial one. The more we rely on AI for emotional regulation, the harder it becomes to face the messiness of real relationships. Conflict, miscommunication, and awkward silences don’t exist with a bot, but they do exist with people. We might be training ourselves to expect frictionless conversations, 24/7 availability, and endless empathy. In other words: perfection. And humans, even at their best, can’t offer that. Some psychologists worry this will lead to emotional atrophy—where we slowly unlearn how to tolerate discomfort, confrontation, or even silence.

Learning to Speak Human Again

Maybe the goal isn’t to cancel AI from our emotional lives but to use it wisely, like a warm-up before the real conversation. A mirror, not a replacement. Having an AI as a therapist can help us understand ourselves better, but it shouldn’t be the only place we practice being vulnerable. Emotional health still depends on real connection, and that means working on our communication skills, especially in this new era where everything is faster and more complex.

These days, even professionals are urging us to communicate more clearly. The trending advice? “When your friend cries, ask them if they want comfort or advice.” Sounds like a ChatGPT prompt, right? And that’s the irony: we’re being told to speak to each other the way we speak to machines. To script our emotions into emotionless inputs. To make human care more predictable and more… programmable?

So, what shaped what?

Did we teach AI how to care like humans, or are we slowly learning to feel like bots?

The Future Is Listening

Maybe the scariest part isn’t that machines are learning to sound like us—it’s that we’re starting to sound like them. But that doesn’t mean the future is doomed or robotic. Maybe it just means we need to pause and ask: What kind of connection are we looking for?

If AI can help us feel seen, great. But it shouldn’t make us forget what real connection sounds like: the long pauses, the awkward phrasing, the half-meant jokes and mistimed replies. That’s the language of being human. Messy and flawed, but alive.

So use the chatbot if it helps. But don’t lose the muscle memory of reaching out, fumbling through a hard conversation, or sitting beside someone in silence. Those imperfect interactions? They’re the real ones worth keeping.

Bring Back Imperfect Interactions

Maybe the real concern isn’t that AI is getting better at understanding us; it’s that we’re starting to rely on it more than we rely on each other. That doesn’t mean the future has to be robotic or disconnected, as long as the imperfect interactions stay part of it.